This is the first of a two-part blog post.

Use Case

I am working on a Financials system running on an engineered system. It runs a daily batch of GL reports on summary ledgers that have unindexed materialized views. The materialized views are also hybrid column compressed (HCC) to reduce their size and improve reporting performance.

We also put the materialized views into the In-Memory store. Initially, we used 'free' base-level In-Memory and worked within the 16Gb/instance limit. Having moved to Exadata Cloud@Customer, we can use the fully licensed version of In-Memory.

Now I have introduced Attribute Clustering for the materialized views.

Attribute Clustering

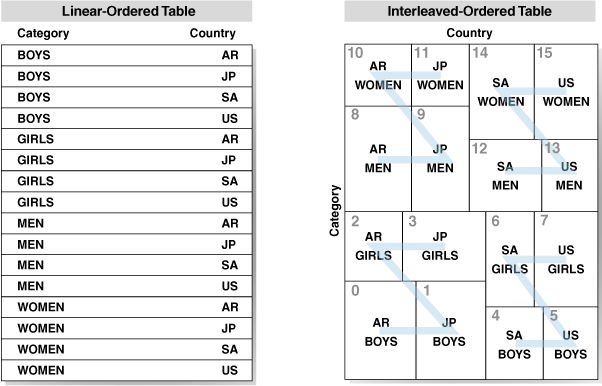

Attribute Clustering has been available on Enterprise Edition since Oracle 12.1.0.2. Data is clustered in close physical proximity according to certain columns. Linear Ordering stores the data according to the order of the specified clustering columns. Interleaved Ordering uses a Z-order curve to cluster data in multiple dimensions (this graphic is from Oracle's documentation).The GL reports have multiple combinations of different predicates. Therefore, as recommended by Oracle, we used interleaved ordering. Linear ordering is not suitable in this case because there is no single suitable order for each table. Linear ordering also caused the runtime of the materialized view refresh to extend much more than interleaved ordering as it has to sort the data.

We have not introduced Zone Maps. That is to say that after testing, we removed them. Zone maps can be thought of as a coarse index of the zones in the attribute clustering, and would normally be expected to improve the access of the data. You can see them being used in the execution plans to access the table both in the tablespace and in the In-Memory store. However, our application dynamically generates a lot of SQL and therefore performs a lot of SQL parse. We found that the additional work to process the zone map significantly degraded performance.

Attribute Clustering is not enforced for every DML operation. It only affects direct-path insert operations, data movement, or table creation. It is easy to implement it for segments that are already HCC, which also relies on direct-path operations. The materialized views were created to introduce HCC, hence they are refreshed in non-atomic mode which truncates and repopulates them in direct-path mode. Thus attribute clustering specified on the materialized views will be implemented as they refresh.

Historical, and therefore static, partitions in the ledger tables are marked for HCC, and we schedule an online rebuild to compress them. Now, that will also apply attribute clustering. This process could be automated with Automatic Storage Compression.

Compression

Simply by storing similar data values together, we obtained better compression from HCC. The tables underlying the materialized views were smaller.

In-Memory also uses columnar compression. Attribute clustering produced a reduction in the size of segments in the In-Memory store. If we were still working within the constraints of Base-Level In-Memory, we would have been able to store more segments in In-Memory.

We are using attribute clustering not to directly improve data access, but to harness a secondary effect, that of improved compression. We are seeing a reduction in the runtime of the reports. Most of the database time is already spent on the CPU (as most of the fact tables are in In-Memory), so this translates to a reduction in CPU consumption. We can consider running more reports concurrently to complete the batch earlier. We can also consider reducing the number of CPUs and therefore reduce cloud subscription costs.

No comments :

Post a Comment